A lot has already been written on Siri, most of it pretty polarized: either of the “it’s a gimmick nobody uses” variety or of the “wait, but I use it!” variety. I don’t mean to throw fuel on the fire, but I keep trying to use Siri and haven’t found it to work well enough to be worth the effort. Here I’ll present some examples that are both edifying and amusing.

(There’s one glaring exception to “I haven’t found it to work well enough”: for straight dictation, it’s uncannily accurate. If it starts and stops listening at the right time and doesn’t encounter a network error (that is, when it works at all), it almost always transcribes exactly what I said. It’s strange to me that Apple brands the dictation feature as part of Siri, because to my way of thinking they’re entirely separate layers: dictation or transcription turns spoken sounds into text, and then Siri assigns a meaning to that text and acts on it. But as far as I can tell, Apple considers dictation to be a Siri function, so I have to give her credit: she’s great at understanding the words I say.)

In the following real-usage examples, Siri, while great at understanding what I say (in all these cases the transcribed query is exactly what I meant to say), is not so great at understanding what I mean.

Flight status:

“I can’t help you with flights.” Actually she did understand what I meant, but doesn’t know how to help. That’s too bad; it seems like a really natural function for a personal assistant, and one that lends itself well to machine-structured representation and constrained search. I expected Siri would knock this one out of the park.

Appointment reminder:

“3 weeks from today” gets interpreted as “1 week from today”. (This screenshot was taken on 2/9, as you can see from the blue marking, and 2/16, where Siri put the appointment, is decidedly not 3 weeks later.) Again, Siri is often credited (and advertised) as being good at setting up appointments; I expected her to knock this one out of the park.
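For the record, here is roughly the arithmetic I expected. This is only a minimal Python sketch, not a claim about how Siri works internally: the helper name `resolve_weeks_from_today` and its regular expression are hypothetical, and the year is an assumption, since the screenshot only shows the day (2/9).

```python
import re
from datetime import date, timedelta

# Hypothetical pattern for phrases like "3 weeks from today" (illustration only).
_WEEKS_FROM_TODAY = re.compile(r"(\d+)\s+weeks?\s+from\s+today", re.IGNORECASE)


def resolve_weeks_from_today(phrase: str, today: date) -> date:
    """Return the date that lies N weeks after `today` for 'N week(s) from today'."""
    match = _WEEKS_FROM_TODAY.search(phrase)
    if match is None:
        raise ValueError(f"unsupported phrase: {phrase!r}")
    return today + timedelta(weeks=int(match.group(1)))


# Assumed year; only the month and day (2/9) come from the screenshot.
today = date(2012, 2, 9)
print(resolve_weeks_from_today("3 weeks from today", today))  # 2012-03-01
print(resolve_weeks_from_today("1 week from today", today))   # 2012-02-16
```

Whatever the year, three weeks after 2/9 lands at the start of March; 2/16 is what you get for one week.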

Another appointment reminder:

“5 weeks from today” gets interpreted as “tomorrow”. Otherwise, so close.

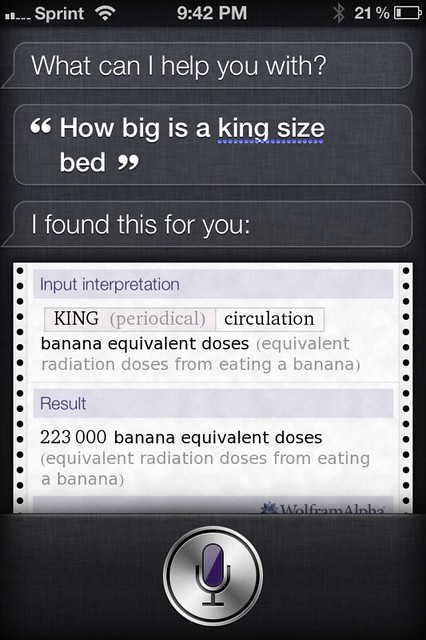

Knowledge question:

This one is more of a stretch, and I wouldn’t have been surprised had Siri fallen back on generic web search, but instead she tries to interpret it and get the answer from Wolfram Alpha, so points for that. But the interpretation she (and/or Wolfram) chooses is straight-up bizarre, which is why this rates inclusion in this post. (I really had to scratch my head to deconstruct this. It decided that “king” refers to a magazine which apparently has a circulation of 223,000; it decided that “bed” is an abbreviation for “banana equivalent dose”; I have no idea why or how it thinks those concepts are related. If you go to Wolfram Alpha and search for “king size bed”, it does understand that the topic is “bed sizes”, though it has no information. If you search for “king bed”, it does take “bed” to mean the radiation unit, does not take “king” to mean the magazine, and clarifies “assuming king is a unit” while offering to “use king bed as a bed size topic instead”. So I think Siri deserves the credit for taking this from crazy to awesome.)

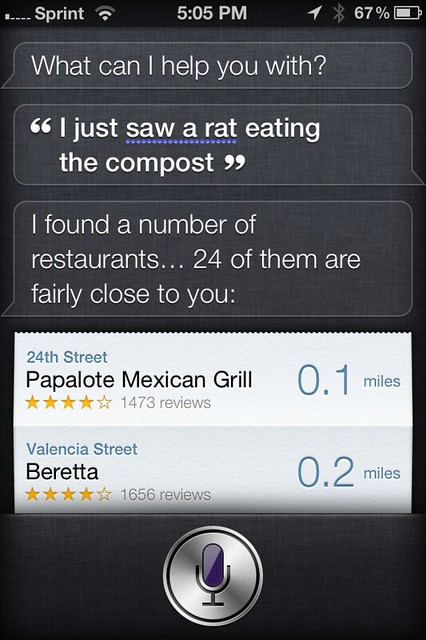

And finally, accidental bonus humor:

OK, this wasn’t meant for Siri’s ears at all (it’s not even a question); in fact I thought I was dictating a text message in the Messages app. (Having input focus in the wrong spot is a strange and niggling complaint for a touch platform like iOS, but this is what happens.) Siri’s interpretation of why I’d be telling her about a rat eating my compost is, however, both noteworthy and disturbing, especially for the establishments it recommends (neither of which deserves this treatment, I can vouch).

On a final note, I’ll reiterate that these are not contrived examples; excluding the compost-eating rat, which was a mistake, this list represents the last 4 times I tried to use Siri with real intent, and at least 3 of these are clearly in her domain. With results like these, I don’t know that I’ll keep trying.